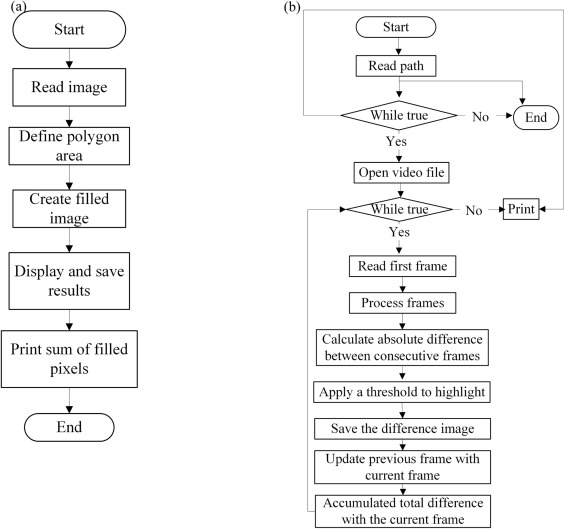

Heat stress is a major welfare problem in the poultry industry, altering broilers’ activity levels. Advancements in image processing and machine learning provide opportunities to automatically quantify and analyze broiler activity. This study aimed to evaluate the effects of moderate heat exposure on broiler behavioral activity via image processing and machine learning. A total of 132 Cobb 500 broilers were raised in 2 nutritional treatment groups, each with 3 replicates. The control groups were fed a basal diet, while the variation groups were fed a diet with 0.05 % 25-hydroxyvitamin D3. All birds were raised under standard environmental conditions for 27 days before exposure to cyclic heat of 29.56 ± 1.34 °C and humidity of 76.97 % ± 5.98 % from 8:00–18:00 and thermoneutral conditions of 26.67 ± 1.76 °C and 80.23 % ± 3.05 % from 18:00–8:00. Birds were continuously video recorded, and the bird activity index (BAI) was calculated by subtracting consecutive frames and summing the pixel differences. The treatment effect was analyzed using two-way ANOVA, with significance set at P < 0.05. K-means clustering was used to classify BAI into high, medium, and low levels. The results showed a significantly higher (P < 0.01) activity index in the variation group than in the control group. Absolute values of high and medium BAI were significantly lower with cyclical heating operations than without heating operations. The BAI was also higher at the onset and end of the heating operations and was moderately correlated with flock age (|r| = 0.35–0.45). The high, medium, and low BAI levels behaved differently under different nutritional treatments, temperature ranges, and relative humidity ranges. It is concluded that the BAI is a useful tool for predicting broiler heat stress, but the prediction effectiveness could be influenced by bird age, diets, temperature, humidity, and behavior metrics.
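The two computational steps in this abstract, frame differencing and K-means clustering, can be illustrated with a minimal sketch. It is not the study's implementation: the use of absolute differences, the quantile-based centroid initialisation, and the function names `activity_index`, `kmeans_1d`, and `label_level` are all assumptions made for illustration, and frames are represented as plain nested lists of grayscale values.

```python
def activity_index(prev_frame, next_frame):
    """Sum of absolute pixel differences between two grayscale frames.

    Assumption: absolute differences are used; the abstract only says
    that pixel differences of consecutive frames are summed.
    """
    return sum(
        abs(a - b)
        for row_prev, row_next in zip(prev_frame, next_frame)
        for a, b in zip(row_prev, row_next)
    )


def kmeans_1d(values, k=3, iterations=50):
    """Plain 1-D k-means; returns the k centroids sorted low to high."""
    ordered = sorted(values)
    # Assumption: centroids start at evenly spaced quantiles so the
    # result is deterministic.
    centroids = [ordered[int((i + 0.5) * len(ordered) / k)] for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            groups[nearest].append(v)
        # Keep the old centroid if a cluster happens to be empty.
        updated = [
            sum(g) / len(g) if g else centroids[i]
            for i, g in enumerate(groups)
        ]
        if updated == centroids:
            break
        centroids = updated
    return sorted(centroids)


def label_level(value, centroids):
    """Map a BAI value to 'low', 'medium', or 'high' by nearest centroid."""
    names = ["low", "medium", "high"]
    nearest = min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))
    return names[nearest]
```

In practice the frames would come from a video reader and the clustering from an established library; the sketch only shows how a per-frame activity value and three activity levels could be derived.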

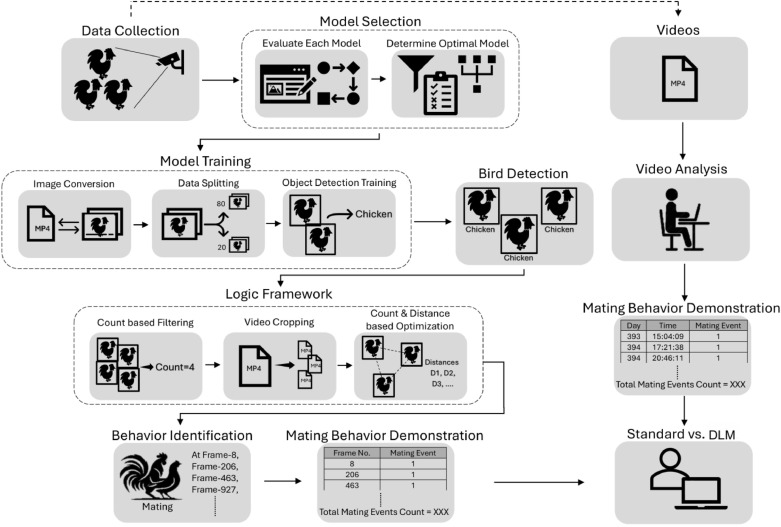

Mating behaviors are crucial for bird welfare, reproduction, and productivity in breeding flocks. During mating, a rooster mounts a hen, which may cause the hen to be overlapped or to disappear from the top view of a vision system. The objective of this research was to develop deep learning models (DLM) to identify mating behavior based on bird count changes and bio-characteristics of mating. Twenty broiler breeder hens and 2–3 roosters (56 weeks of age) of the Ross 708 breed were monitored in four experimental pens. The DLM framework included a bird detection model, data filtering algorithms based on mating duration, and logic frameworks for mating identification based on bird count changes. Pretrained models of object detection (You Only Look Once Version 7 and 8, YOLOv7 and YOLOv8), tracking (YOLOv7 or YOLOv8 with Deep Simple Online Real-time Tracking (SORT), StrongSORT, and ByteTrack), and segmentation (Segment Anything Model2 (SAM2), YOLOv8-segmentation, Track Anything) were comparatively evaluated for bird detection, and the YOLOv8l object detection model was selected due to its balanced performance in processing speed (8 seconds per frame) and accuracy (75 % Mean Average Precision (mAP)). With custom training, the best performance of detecting broiler breeders via YOLOv8l was over 0.939 precision, recall, mAP50, mAP95, and F1 score for training and 0.95 positive and negative predicted values for testing. After comparing 24 scenarios of mating duration and 32 scenarios of time interval, a mating duration of 3–9 seconds and time intervals of T-3 to T+12 seconds based on manual observation were incorporated into the framework to filter out unnecessary data and retain keyframes for further processing, reducing processing time by a factor of 10. The optimized framework effectively detected the birds and identified the mating behavior with 0.92 accuracy compared to other YOLO detection plus logic frameworks. Mating event identification via the developed DLM framework varied across times of day and bird ages due to bird overlapping, gathering densities, and occlusions. By automating this process, breeders can efficiently monitor and analyze mating behaviors, facilitating timely interventions and adjustments in housing and management practices to optimize broiler breeder fertility, genetics, and overall productivity.
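The idea of identifying mating from bird count changes plus a duration filter can be sketched as follows. This is a simplified illustration, not the published logic framework: it assumes one detected bird count per second, a known expected count per pen, and that a candidate event is any run of seconds in which the count falls below the expected value for 3 to 9 seconds. The function name `find_candidate_matings` and its parameters are hypothetical.

```python
def find_candidate_matings(counts, expected, min_s=3, max_s=9):
    """Return (start, end) second indices of candidate mating events.

    counts   : list of detected bird counts, one per second (assumption)
    expected : number of birds normally visible in the pen (assumption)
    A run of below-expected counts is kept only if it lasts between
    min_s and max_s seconds, mirroring the 3-9 s duration filter.
    """
    events = []
    start = None
    # A trailing sentinel equal to `expected` closes any run still open.
    for t, count in enumerate(list(counts) + [expected]):
        if count < expected and start is None:
            start = t
        elif count >= expected and start is not None:
            duration = t - start
            if min_s <= duration <= max_s:
                events.append((start, t - 1))
            start = None
    return events
```

Short drops (for example, a one-second missed detection) and long drops (for example, birds gathering and occluding one another) are discarded by the duration filter, which is the kind of data reduction the abstract describes.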

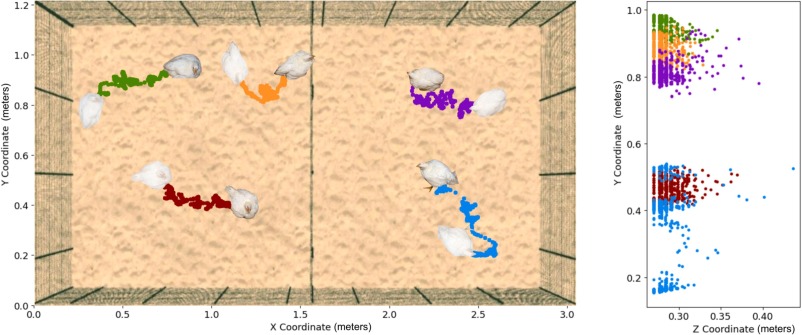

Effective monitoring systems are crucial for improving poultry management and welfare. However, despite the enhanced analytics provided by 3D systems over 2D, affordable options remain limited due to unresolved design and algorithm challenges. The objective of this study was to develop a low-cost intelligent system to monitor the 3D features of poultry. The system consisted of data storage, a mini-computer, and electronics housed in a plastic box, with an RGB-D camera externally connected via USB for flexible installation. Python scripts on the Linux-based Robot Operating System (ROS Noetic) were developed to automatically capture 3D data, transfer it to storage, and notify a manager when storage is full. Various 3D cameras, installation heights (2.25, 2.50, 2.75, and 3.00 m), image resolutions, and data compression settings were tested using a robotic vehicle in a 1.2 m × 3.0 m pen to simulate broiler movement in controlled environments. Optimal configurations, based on the quality of 3D point clouds, were tested in several broiler trials including one containing 1,776 Cobb 500 male broiler chickens. Results showed that the integrated L515 camera provided clearer features and superior 3D point cloud quality at 2.25 m, capturing an average of 1,641 points per frame. Additionally, data compression reduced RGB frame storage by 75%, enabling efficient long-term storage without compromising data quality. During broiler house testing with 1,776 Cobb 500 male broilers, the system demonstrated stable and reliable operation, recording 1.65 TB of data daily at 15 FPS with a 20 TB hard drive, allowing for 12 consecutive days of uninterrupted monitoring. Among object detection models tested, YOLOv8m (a medium-sized version of the YOLO version 8 model) outperformed other models by achieving a precision of 89.2% and an accuracy of 84.8%. Depth-enhanced modalities significantly improved detection and tracking performance, especially under challenging conditions. YOLOv8m achieved 88.2% detection accuracy in darkness compared to 0% with RGB-only data, highlighting the advantage of integrating depth information in low-light environments. Further evaluations showed that incorporating depth modalities also improved object detection in extreme lighting scenarios, such as overexposure and noisy color channels, enhancing the system’s robustness to environmental variations. These results demonstrated that the system was well-suited for accurately capturing 3D data across diverse conditions, providing reliable detection, tracking, and trajectory extraction. The system effectively extracted 3D walking trajectories of individual chickens, enabling detailed behavioral analysis to monitor health and welfare indicators. The system, costing approximately $1,221, integrates cost-effective hardware with a scalable software architecture, enabling precision monitoring in large-scale operations. By reducing storage costs to $28 per day and compressing data without losing critical details, the system is well-suited for practical deployment in poultry farms. The outlined evaluation and customization process ensures that this framework can be adapted to other agricultural or industrial applications, paving the way for intelligent and scalable monitoring systems. These advancements provide a robust foundation for improving animal management, enhancing productivity, and addressing welfare concerns in precision agriculture.
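The reported recording capacity follows from simple arithmetic on the figures in the abstract. The short sketch below reproduces it; the function name `full_days_of_recording` is hypothetical, and the calculation assumes the whole drive is available for data.

```python
import math


def full_days_of_recording(drive_tb, daily_tb):
    """Whole days a drive can hold at a constant daily data volume.

    Assumption: the full nominal capacity is usable for recordings.
    """
    return math.floor(drive_tb / daily_tb)


# Figures from the abstract: 20 TB drive, 1.65 TB recorded per day.
print(full_days_of_recording(20, 1.65))  # 12
```

The ratio 20 / 1.65 is about 12.1, which matches the 12 consecutive days of uninterrupted monitoring reported above.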

Abstract: Nesting behaviors are important to understand facility design, resource allowance, animal welfare, and the health of broiler breeder hens. How to automatically extract informative nesting behavior metrics of broiler breeder hens remains a question. The objective of this work was to quantify the nesting behavior metrics of broiler breeder hens using computationally efficient image algorithms and big data analytics. Here, 20 broiler breeder hens and 1–2 roosters were raised in an experimental pen, and four pens equipped with six-nest-slot nest boxes were used for analyzing the nesting behaviors of broiler hens over the experimental period. Cameras were installed on the top of the nest boxes to monitor the hens’ behaviors, such as the time spent in the nest slot, frequency of visits to the nest slot, simultaneous nesting pattern, hourly time spent by the hens in each nest slot, and time spent before and after feed withdrawal, and videos were continuously recorded for nine days for nine hours a day when the hens were 56 weeks of age. Image processing algorithms, including template matching, thresholding, and contour detection, were developed and applied to quantify the hen nesting behavior metrics frame by frame. The results showed that the hens spent significantly different amounts of time and frequencies in different nest slots (p < 0.001). A decrease in the time spent in all nest slots from 1 pm to 9 pm was observed. The nest slots were not used 60.1% of the time. Overall, the proposed method is a helpful tool to quantify the nesting behavior metrics of broiler breeder hens and support precision broiler breeder management.
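Once a nest slot has been classified as occupied or empty in every frame, metrics such as time spent, visit frequency, and the share of time a slot is unused reduce to counting. The sketch below shows that last step only. It assumes the per-frame occupancy flags are already available (in the study they would come from the template matching, thresholding, and contour detection steps, which are not reproduced here), and the name `nesting_metrics` is hypothetical.

```python
def nesting_metrics(occupied, fps):
    """Summarise nest-slot use from per-frame occupancy flags.

    occupied : list of booleans, one per frame, for a single nest slot
               (assumed to be the output of the image-processing steps)
    fps      : frames per second of the recording
    """
    frames_in = sum(occupied)
    # A visit starts wherever an occupied frame follows an empty frame
    # (or the recording begins with the slot already occupied).
    visits = sum(
        1
        for i, now in enumerate(occupied)
        if now and (i == 0 or not occupied[i - 1])
    )
    return {
        "time_spent_s": frames_in / fps,
        "visits": visits,
        "unused_fraction": 1 - frames_in / len(occupied),
    }
```

Running the same function per slot and per hour would give the slot-level and hourly breakdowns described in the abstract.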

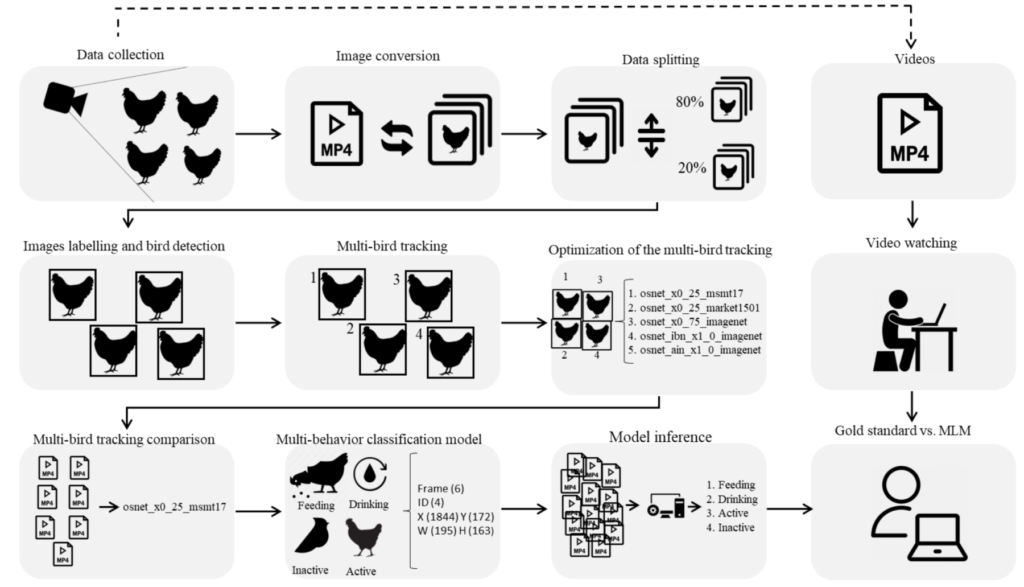

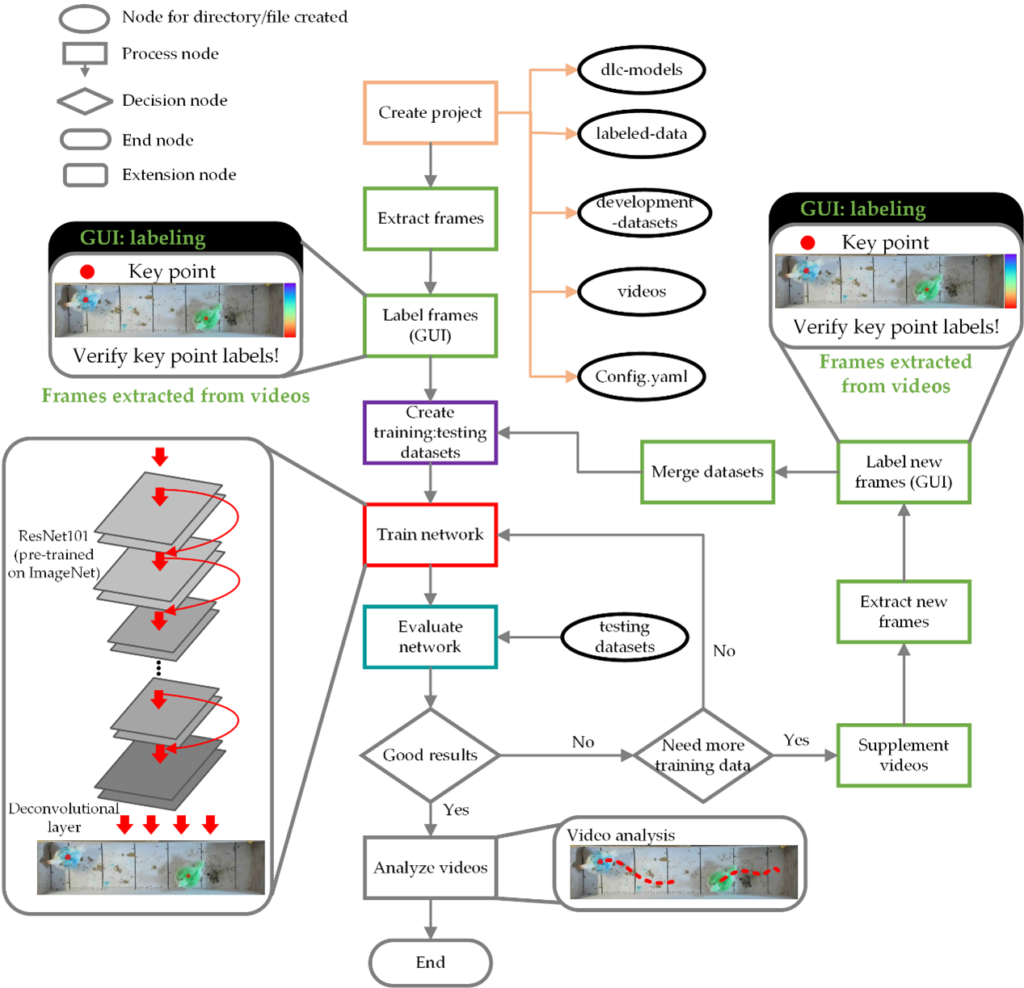

Abstract: Animals’ individual behavior is commonly monitored by live or video observation by a person. This can be labor intensive and inconsistent. An alternative is the use of machine learning-based computer vision systems. The objectives of this study were to 1) develop and optimize machine learning model frameworks for detecting, tracking, and classifying individual behaviors of group-housed broiler chickens in continuous video recordings; and 2) use an independent dataset to evaluate the performance of the developed machine learning model framework for differentiating individual poultry behaviors. Forty-two video recordings from 4 different pens (total video duration = 1,620 min) were used to develop and train multiple models for detecting and tracking individual birds and classifying 4 behaviors: feeding, drinking, active, and inactive. The optimal model framework was used to continuously analyze an external set of 11 videos (duration = 326 min), and the second-by-second behavior of each individual broiler was extracted for comparison with human observation. After comparison of model performance, YOLOv5l, out of 5 detection models, was selected for detecting individual broilers in a pen; osnet_x0_25_msmt17, out of 4 tracking algorithms, was selected to track each detected bird in continuous frames; and the Gradient Boosting Classifier, out of 12 machine learning classifiers, was selected to classify the 4 behaviors. Most of the models were able to keep previously assigned individual identifications of the chickens for limited amounts of time, but lost the identities over an examination period (≥4 min). The final framework accurately predicted feeding time (accuracy = 0.895) and drinking time (accuracy = 0.9) but performed poorly for active time (accuracy = 0.545) and inactive time (accuracy = 0.505). The algorithms employed by the machine learning models were able to accurately detect feeding and drinking behavior but still need to be improved for maintaining individual identities of the chickens and identifying active and inactive behaviors.

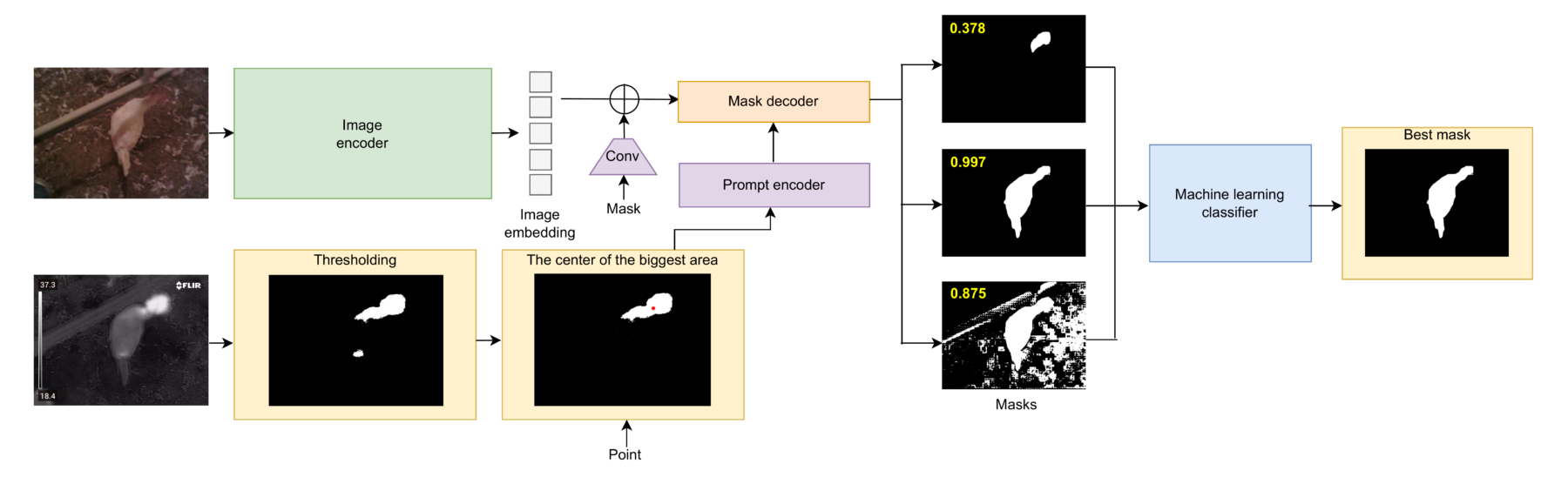

Abstract: Body temperature is a critical indicator of the health and productivity of egg-laying chickens and other domesticated animals. Recent advancements in thermography allow for precise surface temperature measurement without physical contact with animals, reducing animal stress from human handling. Gold standard temperature analysis via thermography requires manual selection of a limited number of points on an object of interest, which can be time-consuming and inadequate for representing the comprehensive thermal profile of a chicken’s body. The objective of this study was to leverage and optimize a zero-shot artificial intelligence technology for the automatic segmentation of individual cage-free laying hens within thermal images, providing insights into their overall thermal conditions. A zero-shot image segmentation model (Segment Anything, “SAM”) was modified by replacing manual selection of target points with automatic selection of the initial point using pre-processing techniques (e.g., thresholding) in each thermal image. Post-processing techniques integrated with a machine learning classifier were also incorporated into the model to improve segmentation accuracy. Three versions of modified SAM models (i.e., SAM, FastSAM, and MobileSAM), two common instance segmentation algorithms (i.e., YOLOv8 and Mask R-CNN), and two foundation segmentation models (i.e., U2-Net and ISNet) were comparatively evaluated to determine the optimal one for bird segmentation from thermal images. A total of 1,917 thermal images were collected from cage-free laying hens (Hy-Line W-36) at 77–80 weeks of age. The image dataset exhibited considerable variations such as feathers, bird movement, body gestures, and the specific conditions of cage-free facilities. The experimental results demonstrate that the modified SAM not only surpassed the six zero-shot models (YOLOv8, Mask R-CNN, FastSAM, MobileSAM, U2-Net, and ISNet) but also outperformed the other modified SAM-based models (Modified FastSAM and Modified MobileSAM) in terms of hen detection performance, achieving a success rate of 84.4 %, and in segmentation performance, with an intersection over union of 85.5 %, recall of 91.0 %, and an F1 score of 92.3 %. The optimal model, modified SAM, was pipelined to extract statistics including the averages (°C) of mean (27.03, 27.04, 28.53, 26.68), median (26.27, 26.84, 28.28, 26.78), 25th percentile (25.33, 25.61, 27.26, 25.53), and 75th percentile (28.04, 27.95, 29.22, 27.55) of surface body temperature of individual laying hens in thermal images for each week. More statistics of hen body surface temperature can be extracted based on the segmentation results. The developed pipeline is a useful tool for automatically evaluating the thermal conditions of individual birds.
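The segmentation metrics quoted above (intersection over union, recall, and F1 score) have standard pixel-level definitions, which the sketch below computes for a pair of binary masks. It uses those standard definitions; how the study aggregated scores across images is not stated, so this covers a single image only. The function name `segmentation_scores` is hypothetical, and masks are plain nested lists of 0/1 values.

```python
def segmentation_scores(pred, truth):
    """Pixel-level IoU, precision, recall, and F1 for two binary masks."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1      # predicted hen pixel that is truly hen
            elif p:
                fp += 1      # predicted hen pixel that is background
            elif t:
                fn += 1      # hen pixel the prediction missed
    union = tp + fp + fn
    iou = tp / union if union else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"iou": iou, "precision": precision, "recall": recall, "f1": f1}
```

Temperature statistics for a bird would then be taken over the thermal pixels where the predicted mask is 1, for example with `statistics.mean` and `statistics.quantiles`.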

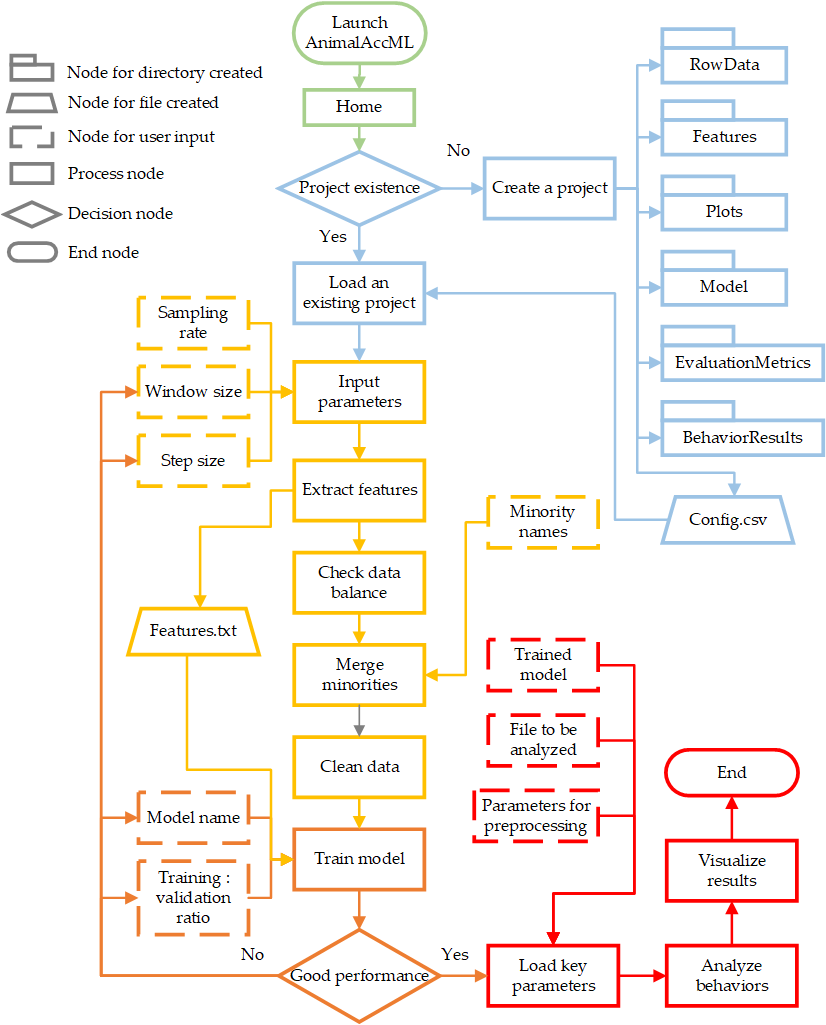

Abstract: Automated collection of accelerometer data and subsequent machine learning modeling are commonly combined for animal behavior recognition. However, there is a lack of customized tools for user-friendly machine learning model development. Meanwhile, existing models from previous research cannot be directly used for behavior interpretation. The objective of this study was to design and develop a tool for customized machine learning model development and animal behavior analysis using triaxial accelerometer data. A graphical user interface was programmed in Python and saved in a public repository for open access. The interface mainly consists of the pages ‘Manage Project’, ‘Preprocess Data’, ‘Develop Models’, and ‘Analyze Behavior’. An open dataset containing triaxial accelerometer data of six beef cattle was used to test the developed interface. The main results show that users can customize appropriate machine learning models for behavior analytics through several mouse clicks on the interface. A total of 15 models can be selected and trained to determine an optimal one, and model performance can be optimized by adjusting the window size, step size, and training-to-validation ratio. Data imbalance can be addressed by merging minority classes into one. The newly developed model can analyze the overall behavior time budget, statistics (e.g., mean, minimum, maximum, and standard deviation) of each behavior duration, and frequency of behavior sequences. The tool supports automated animal behavior analytics critical to enhancing animal welfare, housing environment, genetic selection, and flock management.
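The window size and step size parameters mentioned in this abstract control how a continuous accelerometer stream is cut into segments before modeling. A minimal sketch of that segmentation is shown below. The per-axis features (mean, minimum, maximum, standard deviation) are illustrative assumptions, since the abstract does not list the features the tool computes, and the function names `sliding_windows` and `window_features` are hypothetical.

```python
from statistics import mean, pstdev


def sliding_windows(samples, window_size, step_size):
    """Cut a sequence of (x, y, z) samples into fixed-length windows.

    Consecutive windows start `step_size` samples apart, so a step
    smaller than the window size produces overlapping windows.
    """
    last_start = len(samples) - window_size
    return [
        samples[start:start + window_size]
        for start in range(0, last_start + 1, step_size)
    ]


def window_features(window):
    """Illustrative per-axis features for one window (an assumption)."""
    features = []
    for axis in range(3):
        values = [sample[axis] for sample in window]
        features += [mean(values), min(values), max(values), pstdev(values)]
    return features
```

Each window's feature vector, paired with the behavior label for that window, would form one training example for whichever classifier is selected.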

Abstract: Gait scoring is a useful measure for evaluating broiler production efficiency, welfare status, bone quality, and physiology. The research objective was to track and characterize spatiotemporal and three-dimensional locomotive behaviors of individual broilers with known gait scores by jointly using deep-learning algorithms, depth sensing, and image processing. Ross 708 broilers were placed on a platform specifically designed for gait scoring and manually categorized into one of three numerical scores. Normal and depth cameras were installed on the ceiling to capture top-view videos and images. Four birds from each of the three gait-score categories were randomly selected out of 70 total birds scored for video analysis. Bird moving trajectories and 16 locomotive-behavior metrics were extracted and analyzed via the developed deep-learning models. The trained model gained 100% accuracy and 3.62 ± 2.71 mm root-mean-square error for tracking and estimating a key point on the broiler back, indicating precise recognition performance. Broilers with lower gait scores (less difficulty walking) exhibited more obvious lateral body oscillation patterns, moved significantly or numerically faster, and covered more distance in each movement event than those with higher gait scores. In conclusion, the proposed method had acceptable performance for tracking broilers and was found to be a useful tool for characterizing individual broiler gait scores by differentiating between selected spatiotemporal and three-dimensional locomotive behaviors.
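Two quantities in this abstract lend themselves to a short worked example: the root-mean-square error of a tracked key point, and trajectory-derived measures such as distance covered and mean speed. The sketch below uses 2-D coordinates for brevity, whereas the study worked with three-dimensional data, and the function names `rmse`, `path_length`, and `mean_speed` are hypothetical.

```python
import math


def rmse(predicted, actual):
    """Root-mean-square Euclidean error between matched 2-D points."""
    squared = [
        (px - ax) ** 2 + (py - ay) ** 2
        for (px, py), (ax, ay) in zip(predicted, actual)
    ]
    return math.sqrt(sum(squared) / len(squared))


def path_length(trajectory):
    """Total distance covered along a sequence of (x, y) positions."""
    return sum(math.dist(a, b) for a, b in zip(trajectory, trajectory[1:]))


def mean_speed(trajectory, fps):
    """Average speed, assuming one trajectory point per video frame."""
    duration_s = (len(trajectory) - 1) / fps
    return path_length(trajectory) / duration_s
```

With coordinates in millimetres, `rmse` returns an error in millimetres and `mean_speed` a speed in millimetres per second.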

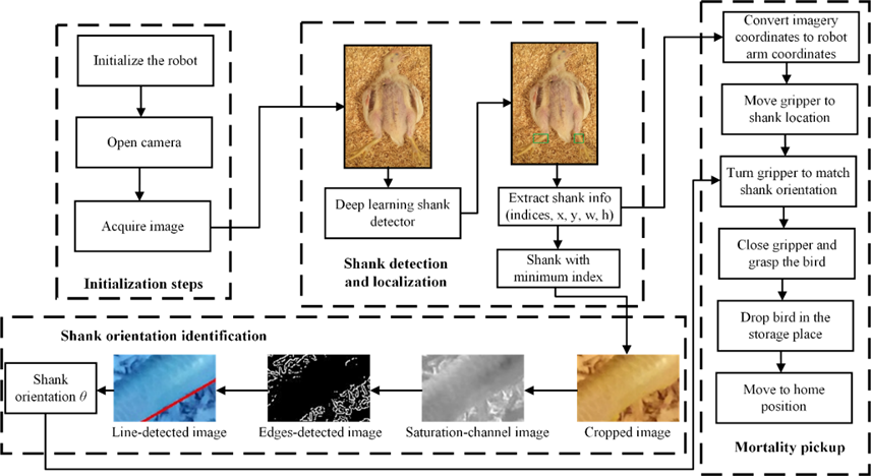

Design and development of a broiler mortality removal robot (Li et al., 2022)

Abstract: Manual collection of broiler mortality is time-consuming, unpleasant, and laborious. The objectives of this research were: (1) to design and fabricate a broiler mortality removal robot from commercially available components to automatically collect dead birds; (2) to compare and evaluate deep learning models and image processing algorithms for detecting and locating dead birds; and (3) to examine detection and mortality pickup performance of the robot under different light intensities. The robot consisted of a two-finger gripper, a robot arm, a camera mounted on the robot’s arm, and a computer controller. The robot arm was mounted on a table, and 64 Ross 708 broilers between 7 and 14 days of age were used for the robot development and evaluation. The broiler shank was the target anatomical part for detection and mortality pickup. Deep learning models and image processing algorithms were embedded into the vision system and provided location and orientation of the shank of interest, so that the gripper could approach and position itself for precise pickup. Light intensities of 10, 20, 30, 40, 50, 60, 70, and 1000 lux were evaluated. Results indicated that the deep learning model “You Only Look Once (YOLO)” V4 was able to detect and locate shanks more accurately and efficiently than YOLO V3. Higher light intensities improved the performance of the deep learning model detection, image processing orientation identification, and final pickup performance. The final success rate for picking up dead birds was 90.0% at the 1000-lux light intensity. In conclusion, the developed system is a helpful tool towards automating broiler mortality removal from commercial housing, and contributes to further development of an integrated autonomous set of solutions to improve production and resource use efficiency in commercial broiler production, as well as to improve well-being of workers.

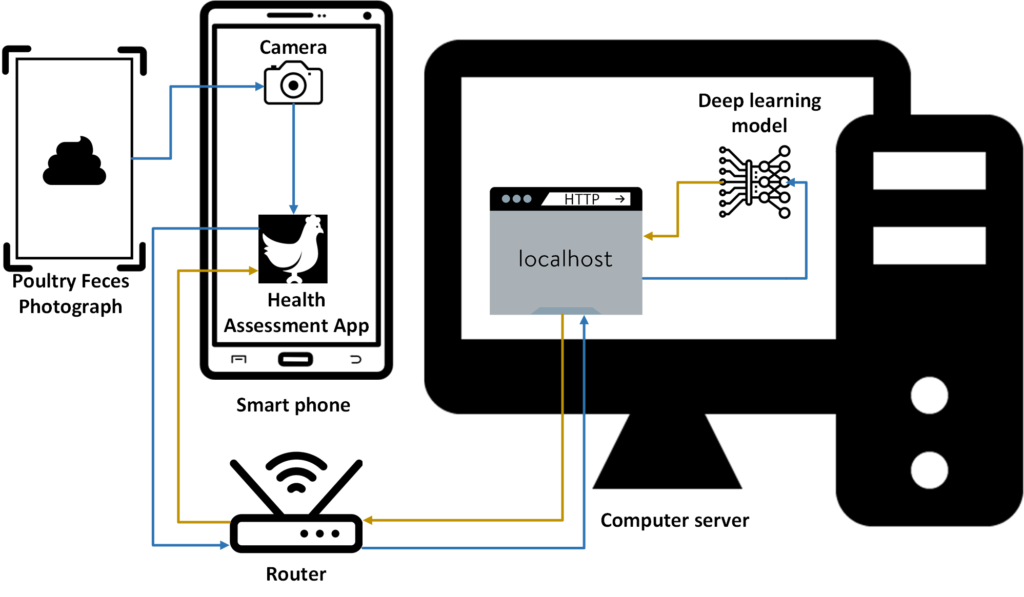

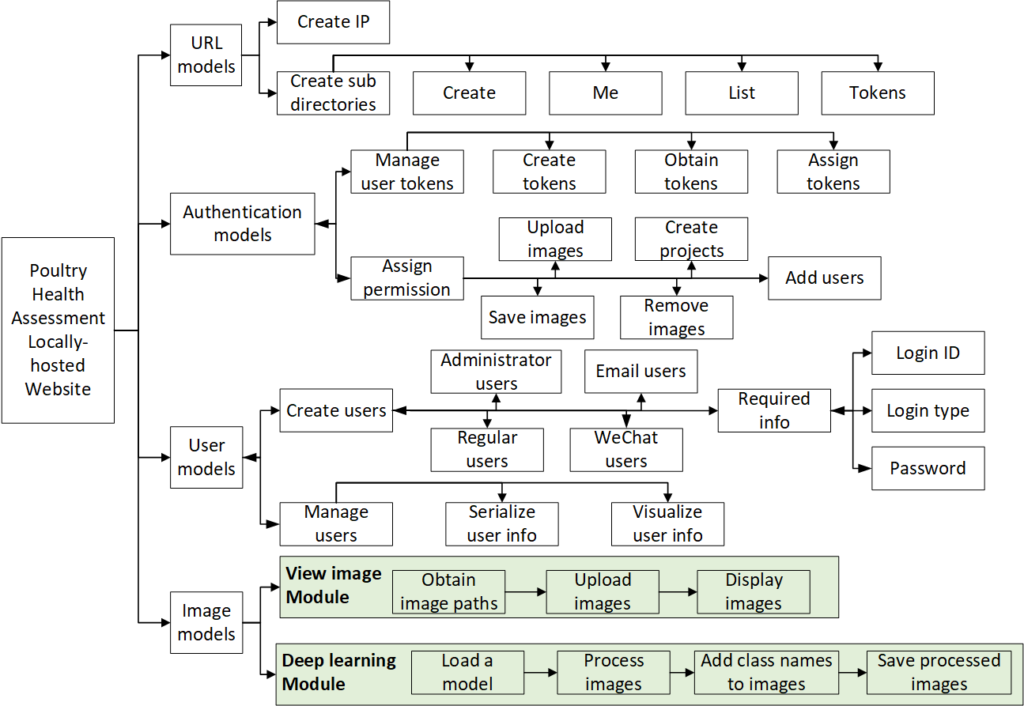

Abstract: Rapid and accurate chicken health assessment can assist producers in making timely decisions, reducing disease transmission, improving animal welfare, and decreasing economic loss. The objective of this research was to develop and evaluate a proof-of-concept mobile application system to assist caretakers in assessing chicken health during their daily flock inspections. A computer server was built to assign users with different usage credentials and receive uploaded fecal images. A dataset containing fecal images from healthy and unhealthy birds (infected with Coccidiosis, Salmonella, and Newcastle disease) was used for classification model development. The modified MobileNetV2 model with additional layers of artificial neural networks was selected after a comparative evaluation of six models. The developed model was embedded into a local server for image classification. An application was developed and deployed, allowing a user with the application on a mobile device to upload a fecal image to a website hosted on the server and receive results processed by the model. Health status is transferred back to the user and can be shared with production managers. The system achieved over 90% accuracy for identifying diseases, and the whole operational procedure took less than one second. This proof-of-concept demonstrates the feasibility of a potential framework for mobile poultry health assessment based on fecal images. However, further development is needed to expand applicability to different production systems through the collection of fecal images from various genetic lines, ages, feed components, housing backgrounds, and flooring types in the poultry industry and improve system performance.

Classifying Ingestive Behavior of Dairy Cows via Automatic Sound Recognition (Li et al., 2021)

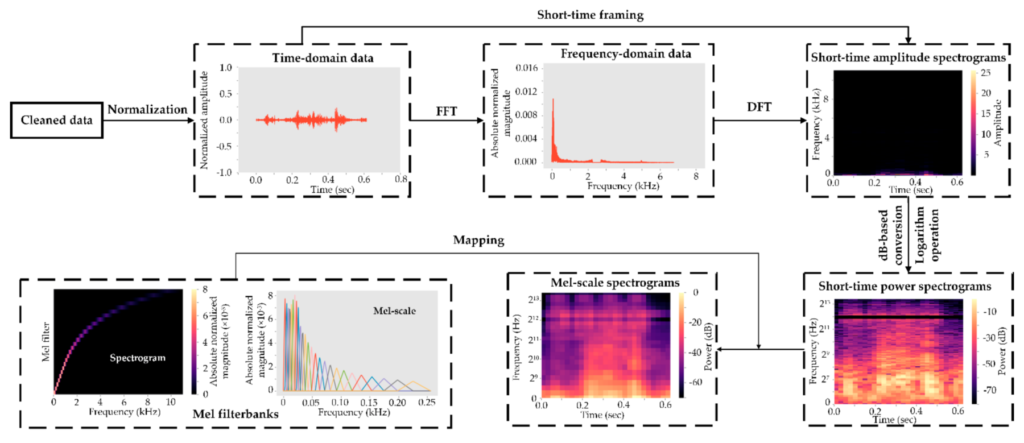

Abstract: Determining ingestive behaviors of dairy cows is critical to evaluate their productivity and health status. The objectives of this research were to (1) develop the relationship between forage species/heights and sound characteristics of three different ingestive behaviors (bites, chews, and chew-bites); (2) comparatively evaluate three deep learning models and optimization strategies for classifying the three behaviors; and (3) examine the ability of deep learning modeling for classifying the three ingestive behaviors under various forage characteristics. The results show that the amplitude and duration of the bite, chew, and chew-bite sounds were mostly larger for tall forages (tall fescue and alfalfa) compared to their counterparts. The long short-term memory network using a filtered dataset with balanced duration and imbalanced audio files offered better performance than its counterparts. The best classification performance was over 0.93, and the best and poorest performance difference was 0.4–0.5 under different forage species and heights. In conclusion, the deep learning technique could classify the dairy cow ingestive behaviors but was unable to differentiate between them under some forage characteristics using acoustic signals. Thus, while the developed tool is useful to support precision dairy cow management, it requires further improvement.