U.S. egg production is shifting from conventional caged systems to cage-free systems, largely due to growing concerns about animal welfare. Over the past decade, the market share of non-organic cage-free eggs has increased by 5–10%, reaching 40% by 2025. However, cage-free housing is associated with higher rates of mortality and injury, often linked to poor management practices. One common issue is keel bone damage, which may result from hens perching or jumping between tiers in aviary systems. During pullet rearing (before 17 weeks of age), ramps are typically provided to help birds access upper tiers (Figure 1). However, in most commercial cage-free layer houses, ramps are removed once hens reach laying age (18 weeks or older), based on the assumption that their wing strength is sufficient for navigation.

Figure 1. Ramps for cage-free hens/pullets (photo credit: Big Dutchman).

Removing the ramps may contribute to the higher incidence of keel bone damage observed in cage-free systems compared to conventional cages. Additionally, cage-free houses report that up to 10% of eggs are laid on the floor, potentially because hens struggle to access elevated nest boxes. These issues highlight the need to investigate hens’ use of ramps in cage-free environments and to explore how system design can improve both keel bone health and floor egg management.

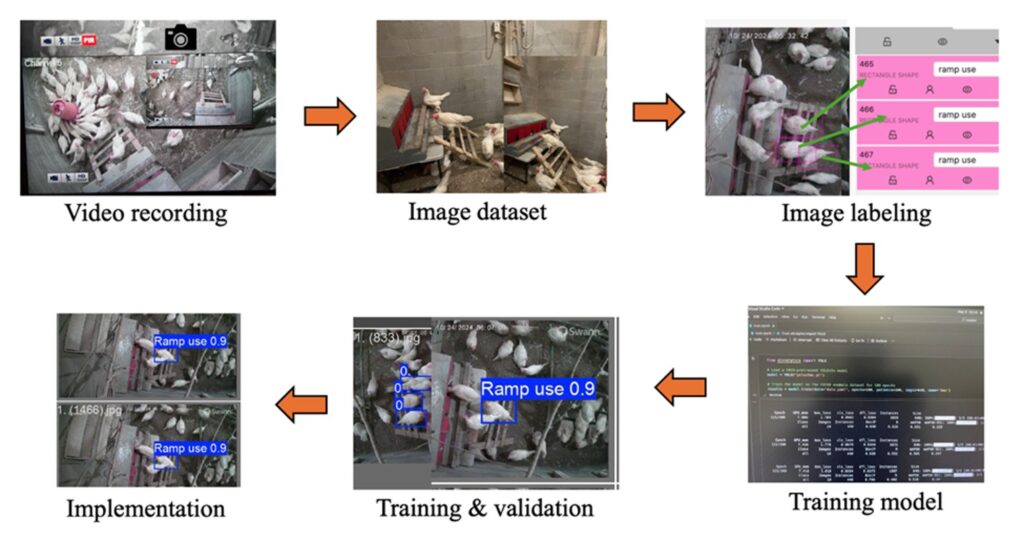

Researchers at the University of Georgia developed a deep learning approach, based on YOLOv5u and YOLO11 models, for monitoring the ramp-use behavior of cage-free hens in a research chicken facility (Figure 2). Night-vision surveillance cameras were used to record video of the study rooms. Specifically, four cameras were mounted on the ceiling of each study room, 3 m above the litter floor and directly above the nest boxes at the four corners of the room. The cameras recorded RGB video continuously, 24 hours a day, throughout the study period. The videos were stored in .avi format at a resolution of 1920 × 1080 pixels and 15 frames per second (fps).
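To give a sense of the data volume this recording setup implies, the arithmetic can be sketched as below. The frame rate, the 24-hour recording, and the four cameras per room come from the description above; the function and constant names are illustrative only.

```python
FPS = 15                         # frames per second, as stated above
SECONDS_PER_DAY = 24 * 60 * 60   # continuous 24-hour recording
CAMERAS_PER_ROOM = 4             # one camera above each corner of a study room


def frames_per_day(fps: int = FPS, cameras: int = 1) -> int:
    """Number of frames produced by `cameras` cameras in 24 hours of video."""
    return fps * SECONDS_PER_DAY * cameras


per_camera = frames_per_day()
per_room = frames_per_day(cameras=CAMERAS_PER_ROOM)
print(per_camera)  # 1296000
print(per_room)    # 5184000
```

At more than a million frames per camera per day, only a small random sample of frames can realistically be reviewed and labeled by hand.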

Figure 2. The workflow for detecting ramp use in laying hens using YOLOv5u and YOLO11 models.

The recorded videos were converted into individual .jpg image files using Python 3.13 at a frame extraction rate of 15 fps, and 3000 images were randomly selected. These images were then manually reviewed to confirm that each one showed ramps and birds using a ramp and was of acceptable overall quality, which left 2000 images. We labeled the training and validation image datasets with an open-source image labeling tool, ‘cvat.ai’. A single trained researcher drew the bounding boxes around hens using the ramps so that the annotations stayed consistent. Hens using a ramp were labelled as ‘ramp use’, and the annotations were downloaded in YOLO format and split for model training (70%), validation (20%), and testing (10%) (Paneru et al., 2024a; 2024b, 2025). Images in the testing dataset were never seen by the model during training or validation, so that performance could be assessed on completely unseen data. Five variants of both YOLOv5u and YOLO11, i.e., nano (n), small (s), medium (m), large (l), and extra-large (x), were trained on the annotated images for 200 epochs with a batch size of 16 and a constant learning rate of 0.01. Python 3.13 was used for training, validation, and testing.
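The split and training setup described above could be sketched roughly as follows. This is a minimal illustration, not the authors' code. It assumes the open-source `ultralytics` Python package was used for training, which the text does not confirm. The file names, the `ramp_use.yaml` dataset file, the random seed, and the helper names are all hypothetical. In that package `lr0` sets the initial learning rate; the optimizer and schedule settings needed to keep it constant are not given in the text and are left out here.

```python
import random


def split_dataset(items, train=0.7, val=0.2, seed=0):
    """Shuffle items and split into train/val/test lists (test takes the remainder)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])


# Hypothetical file names standing in for the 2000 reviewed images.
images = [f"frame_{i:04d}.jpg" for i in range(2000)]
train_set, val_set, test_set = split_dataset(images)
print(len(train_set), len(val_set), len(test_set))  # 1400 400 200


def variant_weights():
    """Ultralytics weight-file names for the ten variants named in the text."""
    sizes = ["n", "s", "m", "l", "x"]
    return [f"yolov5{s}u.pt" for s in sizes] + [f"yolo11{s}.pt" for s in sizes]


def train_variant(weights, data_yaml="ramp_use.yaml"):
    """Train one variant with the stated settings (needs `ultralytics` installed)."""
    # Imported lazily so the rest of this sketch runs without the package.
    from ultralytics import YOLO
    model = YOLO(weights)
    # 200 epochs, batch size 16, initial learning rate 0.01, as stated above.
    return model.train(data=data_yaml, epochs=200, batch=16, lr0=0.01)
```

Calling `train_variant(w)` for each `w` in `variant_weights()` would train all ten variants in turn. Because the test images are set aside before training begins, the model never sees them until the final evaluation.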

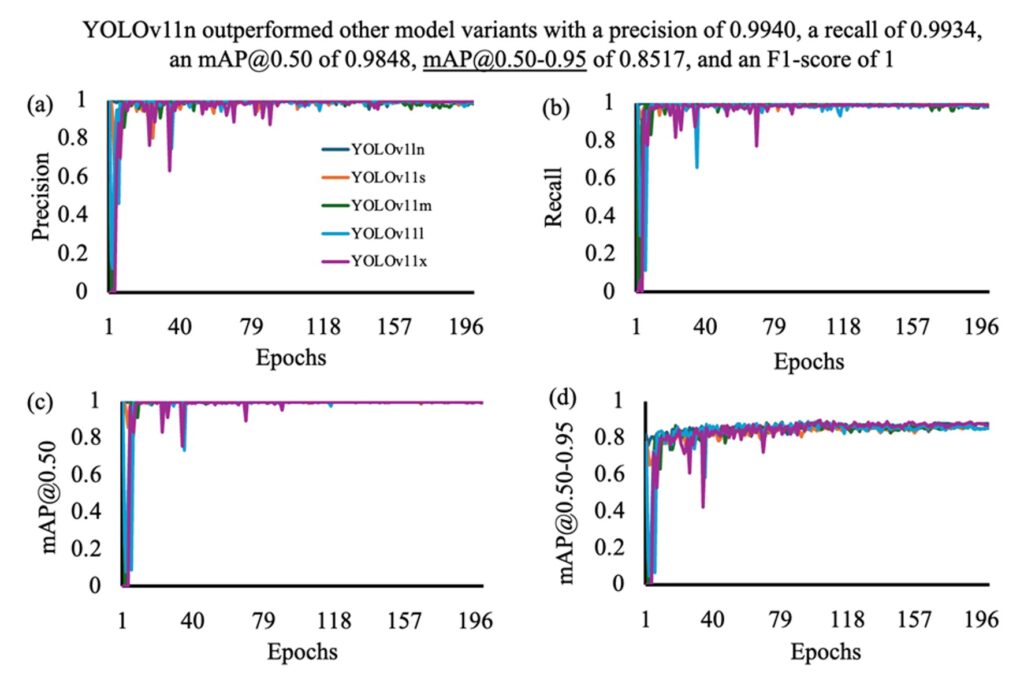

Among the YOLO11 variants, YOLO11n outperformed all others, although the differences across performance metrics were small. It reached a precision of 0.9940, a recall of 0.9934, an mAP@0.50 of 0.9848, and an mAP@0.50-0.95 of 0.8517 as training progressed over the epochs (Figure 3). Precision varied among the YOLO11 variants: YOLO11m and YOLO11x had the lowest precision (0.96), while YOLO11s reached 0.9829 and YOLO11l reached 0.9756. The mAP@0.50 scores of the YOLOv5nu and YOLO11n variants (0.9834 and 0.9538, respectively) further highlight the ability of these models to detect ramp use by laying hens with a high confidence score compared to the other YOLOv5u and YOLO11 variants employed in this study. YOLOv5lu had the lowest performance metrics of all the YOLOv5u and YOLO11 variants, with a precision of 0.9553, a recall of 0.9545, an mAP@0.50 of 0.9447, an mAP@0.50-0.95 of 0.8229, and an F1 score of 0.99.
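For readers less familiar with these metrics, the sketch below shows how an F1 score follows from precision and recall, and how the overlap threshold behind mAP@0.50 is checked. It follows the usual object-detection convention, in which a predicted box counts as a match when its intersection over union (IoU) with a labeled box is at least 0.50, and mAP@0.50-0.95 averages over thresholds from 0.50 to 0.95 in steps of 0.05. The two example boxes are made up for illustration and are not from the study.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def iou(box_a, box_b) -> float:
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min, iy_min = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix_max, iy_max = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Precision and recall reported above for YOLO11n.
print(round(f1_score(0.9940, 0.9934), 4))  # 0.9937

# Made-up labeled and predicted boxes, in pixels.
ground_truth = (100, 100, 200, 200)
prediction = (110, 110, 210, 210)
overlap = iou(ground_truth, prediction)
print(overlap >= 0.50)  # True: counts as a match at the 0.50 threshold
print(overlap >= 0.95)  # False: not a match at the strictest threshold

# IoU thresholds averaged in mAP@0.50-0.95.
thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]
```

The stricter thresholds explain why mAP@0.50-0.95 values are consistently lower than mAP@0.50 values for the same model.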

Figure 3. Performance metrics, (a) precision, (b) recall, (c) mAP@0.50, and (d) mAP@0.50-0.95, of the YOLO11 model versus the number of epochs in detecting ramp use by Lohmann LSL Lite hens in a cage-free (CF) facility. mAP refers to mean average precision.

This study provides a reference for optimizing ramp design and for applied behavior monitoring to improve animal welfare and floor egg management in cage-free houses.

Further reading:

Paneru, B., Yang, X., Dhungana, A., Dahal, S., Ritz, C.W., Kim, W., Liu, T. and Chai, L., 2025. Monitoring the Ramp Use of Cage-free Laying Hens with Deep Learning Technologies. Poultry Science, 105858. https://doi.org/10.1016/j.psj.2025.105858