Broiler production and issues: The world’s population is expected to reach 9.5 billion by 2050, and demand for animal products (e.g., meat, eggs, and milk) is projected to increase by 70% compared with 2005 levels. Improving animal production efficiency and product quality with limited natural resources (e.g., fresh water, feed, and land) is therefore challenging, which makes precision poultry production critical for addressing the issue. A key task of precision poultry production is monitoring animal behaviors, because behavior in the poultry house can serve as an indicator of welfare and health status. In this study, a convolutional neural network (CNN) model was developed to monitor four chicken behaviors: feeding, drinking, standing, and resting. Videos of broilers at different ages were used to build the training datasets, and the new model was compared with several other deep learning frameworks for behavior monitoring. In addition, an attention mechanism module was introduced into the model to analyze its influence on network performance. This study provides a basis for innovative approaches to poultry behavior detection in commercial houses.
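The attention module is not described in detail here. Because the models compared in the Summary include ECA-DenseNet-264, the sketch below assumes the module is Efficient Channel Attention (ECA) and shows its usual steps in plain Python on nested lists: global average pooling per channel, a 1D convolution across neighboring channel descriptors, a sigmoid, and channel-wise rescaling. The function name and kernel weights are hypothetical placeholders; in a trained network the weights are learned, and ECA normally derives the kernel size from the channel count.

```python
import math


def eca_attention(feature_map, kernel_weights):
    """ECA-style channel attention (illustrative sketch, not the study's code).

    feature_map: list of C channels, each an H x W list of floats.
    kernel_weights: odd-length list of 1D convolution weights shared by all
    channels (hypothetical values; a real network learns them).
    Returns (rescaled feature map, per-channel attention weights).
    """
    k = len(kernel_weights)
    if k % 2 == 0:
        raise ValueError("kernel size must be odd")
    pad = k // 2

    # 1) Squeeze: global average pooling gives one descriptor per channel.
    pooled = []
    for channel in feature_map:
        total = sum(sum(row) for row in channel)
        count = sum(len(row) for row in channel)
        pooled.append(total / count)

    # 2) Local cross-channel interaction: 1D convolution (cross-correlation)
    #    over the channel descriptors with zero padding, so each channel only
    #    sees its k nearest neighbors.
    c = len(pooled)
    mixed = []
    for i in range(c):
        s = 0.0
        for j, w in enumerate(kernel_weights):
            idx = i + j - pad
            if 0 <= idx < c:
                s += w * pooled[idx]
        mixed.append(s)

    # 3) Sigmoid maps each mixed descriptor to a weight in (0, 1).
    weights = [1.0 / (1.0 + math.exp(-m)) for m in mixed]

    # 4) Rescale: every value in channel i is multiplied by weights[i].
    out = [[[v * weights[i] for v in row] for row in channel]
           for i, channel in enumerate(feature_map)]
    return out, weights
```

Unlike a fully connected squeeze-and-excitation block, the shared 1D kernel adds only k parameters, which is the main reason ECA is considered lightweight.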

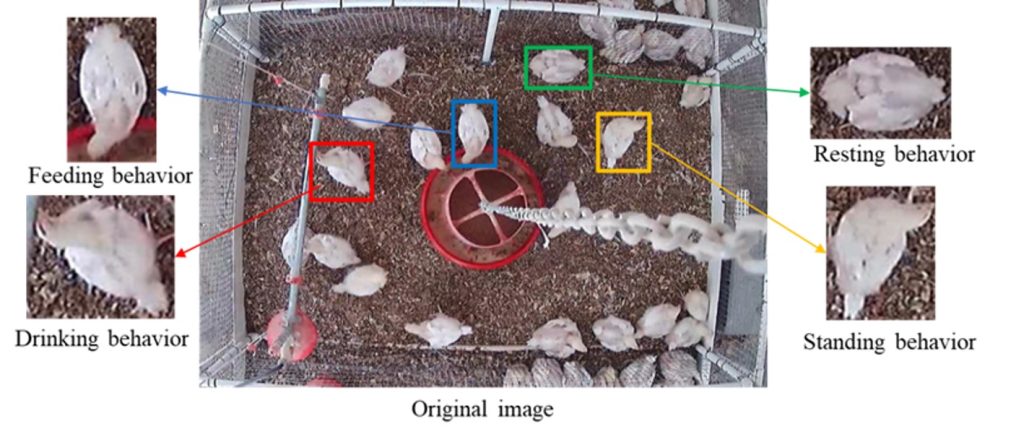

Deep Learning Model and Test Results: Images were collected in a research broiler house (20 birds per pen) on the Poultry Research Farm at the University of Georgia, Athens, USA. Unless otherwise stated, the experimental setup and data were the same as previously published [9]. High-definition (HD) cameras (PRO-1080MSFB, Swann Communications, Santa Fe Springs, CA, USA) were mounted on the ceiling, 2.5 m above the floor, to capture video (15 frames/s, 1440 × 1080 pixels) of broilers from day 1 to day 50. Images from d2, d9, d16, and d23 were selected. For each age, 300 images of each of the four behaviors (feeding, drinking, standing, and resting) were segmented, for a total of 4800 images (300 images × 4 behaviors × 4 ages). Figure 1 shows examples of segmented behavior samples on d16.

Figure 1. Data collection and analysis.

To capture more behavioral features across the multiple poses and viewing angles of broilers, the original images were augmented. First, for each age, 50 images of each behavior were randomly selected from the original dataset as the testing set (800 images in total across the four ages). Then, nine augmentation methods were applied to the remaining original images: contrast increased by 20% and decreased by 20%, brightness increased by 20% and decreased by 20%, rotation by 90°, 180°, and 270°, Gaussian blur, and Gaussian noise. After augmentation, each age had 10,200 images, of which 200 original images (50 per behavior) formed the testing set. The remaining 10,000 images were divided into training and validation sets at a 4:1 ratio. The dataset is summarized in Table 1.
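The nine augmentation operations can be sketched in plain Python on a grayscale image stored as nested lists of pixel values in [0, 255]. The exact parameters are assumptions rather than the study's settings: contrast is scaled around the image mean, brightness is a multiplicative factor, blur uses a 3 × 3 binomial kernel with clamped borders, and noise is zero-mean Gaussian with a standard deviation of 10 grey levels. All function names are hypothetical; in practice these operations would usually come from an image library such as torchvision or OpenCV.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale rows of pixel values in [0, 255]


def clip(v: float) -> float:
    return max(0.0, min(255.0, v))


def adjust_contrast(img: Image, factor: float) -> Image:
    # Stretch (factor > 1) or compress (factor < 1) values around the image mean.
    n = sum(len(row) for row in img)
    mean = sum(sum(row) for row in img) / n
    return [[clip(mean + factor * (v - mean)) for v in row] for row in img]


def adjust_brightness(img: Image, factor: float) -> Image:
    # Assumed multiplicative brightness change, clipped to the valid range.
    return [[clip(v * factor) for v in row] for row in img]


def rotate90(img: Image) -> Image:
    # Rotate 90 degrees clockwise: reverse the row order, then transpose.
    return [list(col) for col in zip(*img[::-1])]


def rotate(img: Image, degrees: int) -> Image:
    for _ in range((degrees // 90) % 4):
        img = rotate90(img)
    return img


def gaussian_blur(img: Image) -> Image:
    # 3x3 binomial approximation of a Gaussian kernel; borders are clamped.
    k = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            s = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    s += k[dy + 1][dx + 1] * img[yy][xx]
            row.append(s / 16.0)
        out.append(row)
    return out


def gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    return [[clip(v + rng.gauss(0.0, sigma)) for v in row] for row in img]


def augment(img: Image, rng: random.Random, noise_sigma: float = 10.0) -> List[Image]:
    """Return the nine augmented copies listed in the text (order is arbitrary)."""
    return [
        adjust_contrast(img, 1.2), adjust_contrast(img, 0.8),
        adjust_brightness(img, 1.2), adjust_brightness(img, 0.8),
        rotate(img, 90), rotate(img, 180), rotate(img, 270),
        gaussian_blur(img),
        gaussian_noise(img, noise_sigma, rng),
    ]
```

Each remaining original plus its nine copies yields ten images per source, which is how the 250 non-test originals per behavior become 2500 training and validation images.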

Table 1. Broiler behavior dataset information.

| Category | Images (d2/d9/d16/d23) | Description |
| --- | --- | --- |
| Feeding | 2550/2550/2550/2550 | Body is next to the feeder and the head is above the feed |
| Drinking | 2550/2550/2550/2550 | Head is close to and towards the drinker |
| Standing | 2550/2550/2550/2550 | Body is still, and the head may turn slightly |
| Resting | 2550/2550/2550/2550 | Body is close to the ground, and the head may turn slightly |
| Training data | 8000/8000/8000/8000 | - |
| Validation data | 2000/2000/2000/2000 | - |
| Testing data | 200/200/200/200 | - |
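The counts in Table 1 follow from the procedure described above. The sketch below reproduces the per-age bookkeeping with hypothetical image IDs: hold out 50 originals per behavior for testing, add nine augmented copies of each of the remaining 250, and split the resulting 10,000 images 4:1 into training and validation sets. The helper name, random seed, and ID format are illustrative assumptions.

```python
import random

BEHAVIORS = ["feeding", "drinking", "standing", "resting"]


def build_day_split(images_per_behavior=300, test_per_behavior=50,
                    n_augmentations=9, train_ratio=4, val_ratio=1, seed=0):
    """Reproduce the per-age dataset bookkeeping (hypothetical helper).

    Image IDs are placeholders such as "feeding_0" or "feeding_0_aug3".
    Returns (train, val, test, images per behavior including test).
    """
    rng = random.Random(seed)
    test, pool = [], []
    per_behavior_total = {}
    for b in BEHAVIORS:
        originals = [f"{b}_{i}" for i in range(images_per_behavior)]
        rng.shuffle(originals)
        held_out = originals[:test_per_behavior]   # un-augmented test images
        remaining = originals[test_per_behavior:]
        augmented = [f"{name}_aug{k}" for name in remaining
                     for k in range(1, n_augmentations + 1)]
        test.extend(held_out)
        pool.extend(remaining + augmented)
        per_behavior_total[b] = len(held_out) + len(remaining) + len(augmented)

    # Split the non-test pool at train:val = 4:1.
    rng.shuffle(pool)
    n_train = len(pool) * train_ratio // (train_ratio + val_ratio)
    return pool[:n_train], pool[n_train:], test, per_behavior_total
```

A plain shuffle, as here, can place an original image and its augmented copies in different subsets; grouping by source image before splitting would avoid near-duplicates across training and validation. The text does not say which approach was used.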

All CNN models in the comparison were trained and tested on a GPU (graphics processing unit) server using Python and the PyTorch 1.7.1 deep learning framework (Meta AI, Menlo Park, CA, USA). Table 2 lists the hardware and software configuration.

Table 2. Hardware and software systems.

| Configuration item | Value |
| --- | --- |
| CPU | Intel® Xeon® Gold 5217 CPU @ 3.00 GHz |
| GPU | NVIDIA Tesla V100 (32 GB) |
| Operating system | Ubuntu 18.04.5 LTS (64-bit) |
| RAM | 251.4 GB |
| Hard disk | 8 TB |

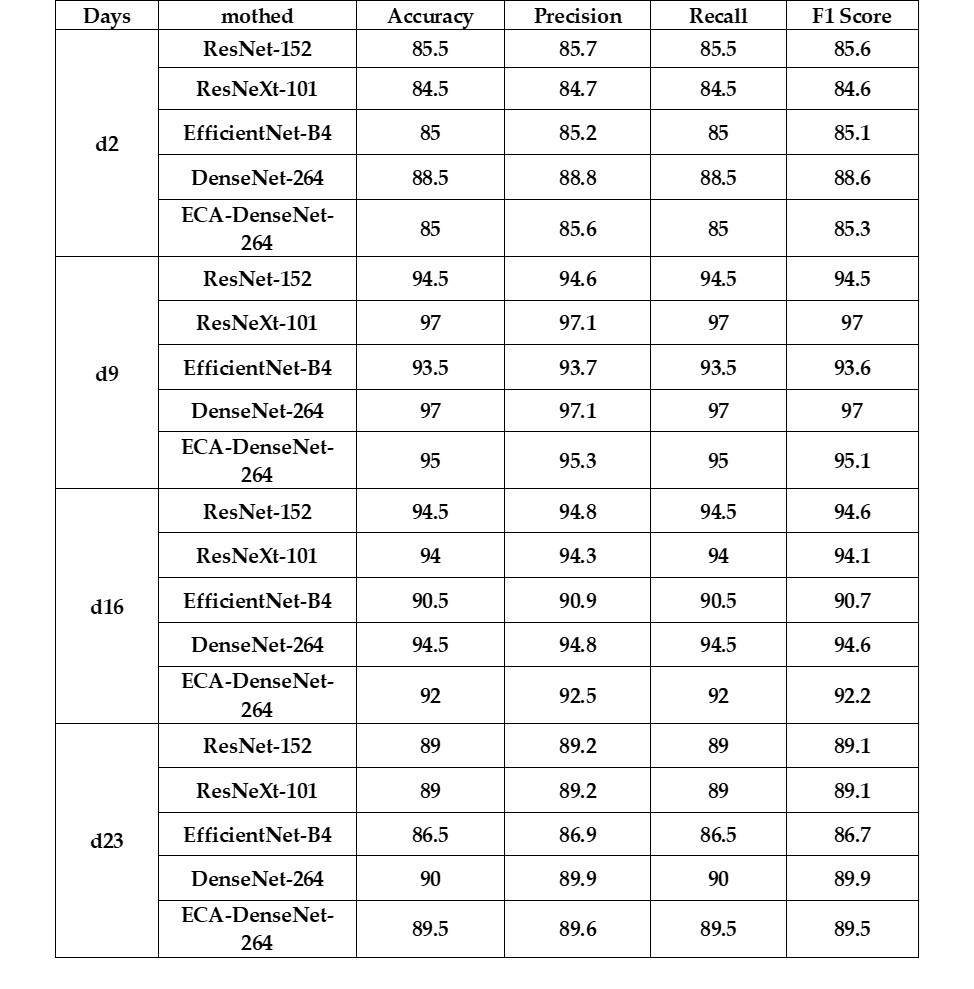

The recognition results of the CNN models on the broiler datasets are shown in Table 3. On the d2 dataset, DenseNet-264 achieved an accuracy of 88.5%, a precision of 88.8%, a recall of 88.5%, and an F1 score of 88.6%, outperforming the other comparison methods.
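The four metrics in Table 3 can be computed from a confusion matrix. The sketch below assumes macro averaging over the four behaviors and F1 taken as the harmonic mean of macro precision and recall; the text does not state which conventions were used, and `classification_metrics` is a hypothetical helper. Under these assumptions, macro recall equals overall accuracy whenever every behavior has the same number of test images (50 here), which is consistent with the identical 88.5% accuracy and recall reported for d2. The harmonic mean of the reported precision and recall, 2 × 88.8 × 88.5 / (88.8 + 88.5) ≈ 88.65%, is likewise consistent with the reported F1 of 88.6%.

```python
def classification_metrics(confusion):
    """Accuracy plus macro-averaged precision, recall and F1.

    confusion[i][j] counts test images whose true behavior is i and whose
    predicted behavior is j (hypothetical helper, not the study's code).
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))

    precisions, recalls = [], []
    for c in range(n):
        tp = confusion[c][c]
        predicted_c = sum(confusion[r][c] for r in range(n))  # column sum
        actual_c = sum(confusion[c])                         # row sum
        precisions.append(tp / predicted_c if predicted_c else 0.0)
        recalls.append(tp / actual_c if actual_c else 0.0)

    precision = sum(precisions) / n  # macro average: each behavior weighs the same
    recall = sum(recalls) / n
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": correct / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "per_class_recall": recalls,  # often reported as per-behavior accuracy
    }


if __name__ == "__main__":
    # Hypothetical counts for 50 test images per behavior (not the study's results).
    # Row/column order: drinking, feeding, resting, standing.
    example = [
        [48, 2, 0, 0],
        [1, 47, 1, 1],
        [0, 0, 44, 6],
        [0, 1, 8, 41],
    ]
    for name, value in classification_metrics(example).items():
        print(name, value)
```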

Table 3. Detection results of different deep learning models for broiler chickens.

Figure 2. Behavior classification results of DenseNet-264 on the broiler dataset.

Figure 2 shows that the drinking, feeding, resting, and standing behaviors of broilers were correctly classified. The accuracies for drinking and feeding were higher than those for resting and standing, because drinking and feeding are distinctly characterized by contact with the drinkers or feeders.

Summary

This study evaluated a newly trained CNN model (DenseNet-264) for recognizing broiler behaviors at different ages. The DenseNet-264 model achieved accuracies of 88.5%, 97%, 94.5%, and 90% on d2, d9, d16, and d23, respectively, outperforming other existing CNN models such as ResNet-152, ResNeXt-101, EfficientNet-B4, and ECA-DenseNet-264. Its behavior recognition performance was also higher than that of the other comparison methods from day 2 to day 23, especially for standing and resting behaviors.

Further reading:

Guo, Y., Aggrey, S.E., Wang, P., Oladeinde, A. & Chai, L. (2022). Monitoring behaviors of broiler chickens at different ages with deep learning. Animals, 12(23), 3390.